In paid traffic, guessing is expensive. The difference between a high-performing ad and a flop often comes down to testing. That’s where split testing—also known as A/B testing—comes in. It’s one of the most reliable methods for improving your campaigns using data instead of assumptions. In this article, you’ll learn what split testing is, how it works, and how to use it to boost your ROI.

What Is Split Testing?

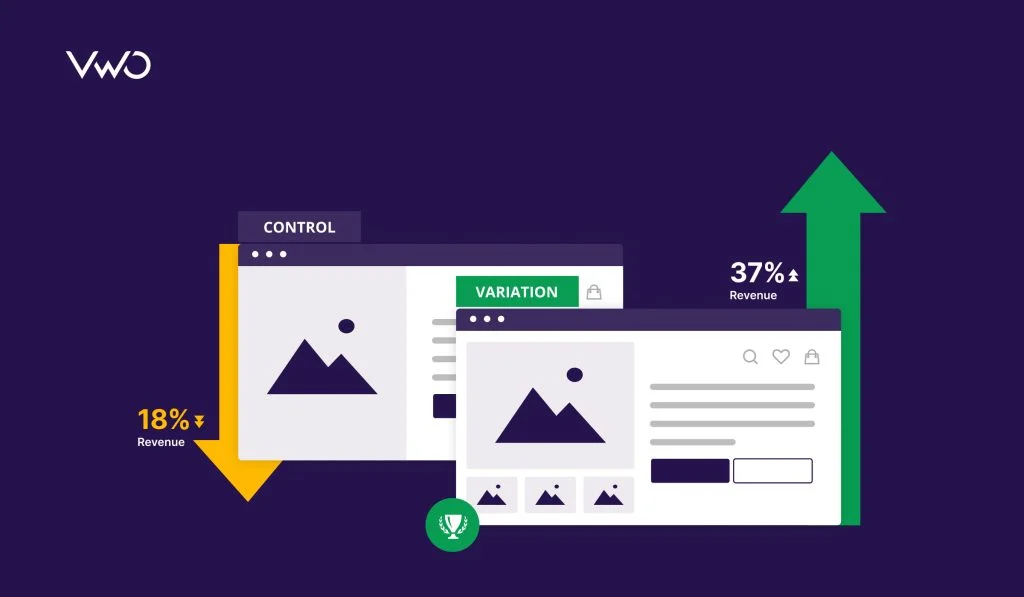

Split testing is the process of comparing two or more versions of an ad, landing page, or campaign element to see which performs better. Each variation (A, B, C…) is shown to a portion of your audience, and the results determine the winner based on a defined goal (clicks, conversions, etc.).

Common Elements to A/B Test

- Headlines: Short vs long, benefits vs curiosity

- Ad copy: Emotional vs logical tone

- Creative format: Images vs videos, or different visual styles

- Call to Action (CTA): “Buy Now” vs “Get Yours”

- Landing page layout: Button placement, testimonials, color schemes

- Audience segments: Age groups, interests, lookalikes

Each test gives you insight into what your audience actually responds to.

How A/B Testing Works

- You create two (or more) versions of the same ad or landing page

- You split your audience evenly across variations

- You run the test for a set time or until the results reach statistical significance

- You compare performance (CTR, conversions, cost per result)

- You scale the winning version and archive the rest
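
When you run a test on your own landing page rather than inside an ad platform, the even split in the second step can be sketched as a hash-based assignment. This is a minimal illustration, not any platform's actual method: the function name `assign_variant`, the test name, and the user IDs are all hypothetical.

```python
import hashlib
from typing import Sequence


def assign_variant(user_id: str, test_name: str, variants: Sequence[str]) -> str:
    """Deterministically map a user to one variant with roughly equal odds."""
    # Hash the test name together with the user ID so the same user can
    # fall into different buckets across unrelated tests.
    key = f"{test_name}:{user_id}".encode("utf-8")
    digest = hashlib.sha256(key).hexdigest()
    # Turn the hash into a bucket index in the range 0..len(variants)-1.
    bucket = int(digest, 16) % len(variants)
    return variants[bucket]


if __name__ == "__main__":
    variants = ["A", "B"]
    counts = {name: 0 for name in variants}
    # Hypothetical user IDs, only to show that the split comes out near 50/50.
    for i in range(10_000):
        counts[assign_variant(f"user-{i}", "headline-test", variants)] += 1
    print(counts)
```

Because the assignment depends only on the user ID and the test name, a returning visitor always sees the same variation, which keeps the two groups from contaminating each other.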

Most platforms (Meta, Google, TikTok) support native A/B testing tools.
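
Whichever tool runs the test, the comparison step comes down to a few ratios. The sketch below computes CTR, conversion rate, and cost per conversion for each variation. The `VariantStats` class and all of the numbers are made up for illustration, and conversion rate is assumed here to mean conversions per click.

```python
from dataclasses import dataclass
from typing import Iterable


@dataclass
class VariantStats:
    name: str
    impressions: int
    clicks: int
    conversions: int
    spend: float

    @property
    def ctr(self) -> float:
        # Click-through rate: clicks per impression.
        return self.clicks / self.impressions if self.impressions else 0.0

    @property
    def conversion_rate(self) -> float:
        # Conversions per click (an assumption; some teams use per impression).
        return self.conversions / self.clicks if self.clicks else 0.0

    @property
    def cost_per_conversion(self) -> float:
        # Infinite cost when nothing converted, so the variant never "wins".
        return self.spend / self.conversions if self.conversions else float("inf")


def current_leader(variants: Iterable[VariantStats]) -> VariantStats:
    """Return the variant with the lowest cost per conversion so far."""
    return min(variants, key=lambda v: v.cost_per_conversion)


if __name__ == "__main__":
    # Illustrative numbers only, not real campaign data.
    a = VariantStats("A", impressions=10_000, clicks=200, conversions=10, spend=100.0)
    b = VariantStats("B", impressions=10_000, clicks=150, conversions=15, spend=100.0)
    for v in (a, b):
        print(v.name, f"CTR={v.ctr:.2%}", f"CVR={v.conversion_rate:.2%}",
              f"cost/conv={v.cost_per_conversion:.2f}")
    print("Current leader:", current_leader([a, b]).name)
```

In this made-up data, variation A has the higher CTR but B converts more cheaply, which is why the metric you compare on should match your goal. A lead like this is only a lead until the test has gathered enough data to be statistically significant.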

Platforms That Support Split Testing

- Meta Ads (Facebook/Instagram): A/B Test tool in Experiments

- Google Ads: Campaign Experiments and Ad Variations

- TikTok Ads: Custom split test setup using multiple ad groups

- LinkedIn Ads: Use separate campaigns for structured testing

- Landing page tools: Unbounce, Leadpages, Instapage

Best Practices for A/B Testing

- Test one variable at a time

- Keep test groups equal in size and targeting

- Run tests for at least 3–7 days, and longer when budget or traffic is low, so each variation collects enough data

- Focus on meaningful metrics: track conversions, not just CTR

- Use a statistical significance calculator to check that a difference is unlikely to be due to chance

- Archive old tests and document your learnings
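
For readers curious what a significance calculator does under the hood, one common approach is a two-proportion z-test on conversion rates. The sketch below is one way to do it with the Python standard library. It is not the method of any particular calculator, the function name is hypothetical, and it assumes samples large enough for the normal approximation to hold.

```python
import math
from typing import Tuple


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int) -> Tuple[float, float]:
    """Return (z, two-sided p-value) for the difference in conversion rate.

    conv_* are conversion counts, n_* are visitors (or clicks) per variation.
    """
    rate_a = conv_a / n_a
    rate_b = conv_b / n_b
    # Pooled rate under the null hypothesis that A and B convert equally.
    pooled = (conv_a + conv_b) / (n_a + n_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if std_err == 0:
        # No conversions at all (or all converted): nothing to distinguish.
        return 0.0, 1.0
    z = (rate_b - rate_a) / std_err
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


if __name__ == "__main__":
    # Illustrative counts only, not real campaign data.
    z, p = two_proportion_z_test(conv_a=100, n_a=1000, conv_b=150, n_b=1000)
    print(f"z = {z:.2f}, p = {p:.4f}")
    print("Significant at the 5% level" if p < 0.05 else "Not significant yet")
```

A p-value below a threshold chosen in advance (0.05 is a common convention) suggests the difference is unlikely to be noise. Checking the p-value repeatedly and stopping the moment it dips below the threshold inflates false positives, so decide the test length before you start.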

What to Avoid

- Testing too many elements at once

- Stopping a test too early

- Running tests on budgets or traffic volumes too small to produce reliable results

- Ignoring audience behavior and engagement quality

- Assuming winners will always perform the same when scaled
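
To see why early stops and low traffic are a problem, it helps to estimate how many visitors a test needs before it starts. The sketch below uses the standard normal-approximation formula for comparing two proportions. The function names, the 2% baseline, the 20% relative lift, and the daily traffic figure are assumptions chosen for illustration.

```python
import math
from statistics import NormalDist


def sample_size_per_variant(baseline_rate: float, relative_lift: float,
                            alpha: float = 0.05, power: float = 0.8) -> int:
    """Approximate visitors needed per variation to detect the given lift."""
    p1 = baseline_rate
    p2 = baseline_rate * (1 + relative_lift)
    # Critical values for a two-sided test at level alpha and the target power.
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_beta = NormalDist().inv_cdf(power)
    variance = p1 * (1 - p1) + p2 * (1 - p2)
    n = (z_alpha + z_beta) ** 2 * variance / (p2 - p1) ** 2
    return math.ceil(n)


def days_needed(n_per_variant: int, num_variants: int, daily_visitors: int) -> int:
    """Days to reach the sample size if traffic is split evenly."""
    return math.ceil(n_per_variant * num_variants / daily_visitors)


if __name__ == "__main__":
    # Assumed inputs: 2% baseline conversion rate, hoping to detect a 20% lift.
    n = sample_size_per_variant(baseline_rate=0.02, relative_lift=0.20)
    print("Visitors needed per variation:", n)
    print("Days at 1,000 visitors/day:", days_needed(n, num_variants=2, daily_visitors=1000))
```

Under these assumptions the requirement comes out at roughly 21,000 visitors per variation, far more than a small budget delivers in a few days. Smaller expected lifts push the number higher still, so a low-traffic test is better spent on bold changes than on subtle ones.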

Final Thoughts: Data > Guesswork

Split testing is not optional—it’s essential. In paid traffic, small changes can create big improvements. When you consistently test and optimize, you reduce waste, increase conversions, and build smarter campaigns. Always be testing, always be improving.